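Robots.txt is a text file in the root directory of a site that tells web robots (such as search engine crawlers) which pages they should not crawl. With the 'Disallow' directive you can keep parts of your site from being crawled and found by search engines. The file is only a request that well-behaved robots honor, and anyone can read it, so the paths it lists are not actually hidden.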
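
To make the idea concrete, here is a minimal Python sketch using the standard library's `urllib.robotparser`, which is roughly how a polite crawler decides whether it may fetch a URL. The robots.txt content and the `example.com` URLs are hypothetical, and the file is parsed from a string instead of being downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def make_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = make_parser(ROBOTS_TXT)
# A well-behaved crawler checks each URL against the rules before fetching it.
print(parser.can_fetch("*", "https://example.com/index.html"))         # True
print(parser.can_fetch("*", "https://example.com/private/page.html"))  # False
```

The check happens entirely on the crawler's side. Nothing stops a browser from requesting `/private/page.html` directly, which is what the steps below rely on.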

Step 1. Open http://www.google.com and search for:

"robots.txt" "disallow:" filetype:txt

Step 2. The results are robots.txt files from sites that use the Disallow directive.

Step 3. Open one of them, for example the first result, from the White House site. You can see that many pages are blocked from search engines.
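
To list the blocked paths without reading the file by eye, here is a minimal Python sketch (assuming Python 3.9+) that pulls the values out of the Disallow lines. The function name `disallowed_paths` and the sample file are hypothetical, and the parsing is deliberately simple: it ignores which User-agent group a line belongs to and does not interpret wildcards.

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Return the non-empty paths listed on Disallow lines, in file order."""
    paths = []
    for raw_line in robots_txt.splitlines():
        # Drop comments ("# ...") and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        # Field names are case-insensitive ("Disallow", "disallow", ...).
        if field.strip().lower() == "disallow":
            value = value.strip()
            if value:  # an empty Disallow line blocks nothing
                paths.append(value)
    return paths

# Hypothetical sample file; a real one would be read from the site's /robots.txt.
sample = """\
User-agent: *
Disallow: /private/   # staff only
Disallow: /drafts/report.html
Disallow:
Allow: /public/
"""
print(disallowed_paths(sample))  # ['/private/', '/drafts/report.html']
```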

Step 4. To open a 'forbidden' page, copy the path from the Disallow line you want, without the "text" at the end.

Step 5. In the browser's address bar, replace /robots.txt with the path you copied and press Enter. If the server serves that page publicly, it will open.
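
Step 5 is just URL arithmetic, sketched here in Python with the standard library's `urljoin`. The function name `page_url` and the `example.com` address are hypothetical. The sketch only builds the address; whether the page actually loads is up to the server.

```python
from urllib.parse import urljoin

def page_url(robots_url: str, path: str) -> str:
    """Swap the /robots.txt part of the URL for a path copied from a Disallow line."""
    # A path starting with "/" replaces everything after the host name.
    return urljoin(robots_url, path)

print(page_url("https://example.com/robots.txt", "/private/"))
# https://example.com/private/
```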

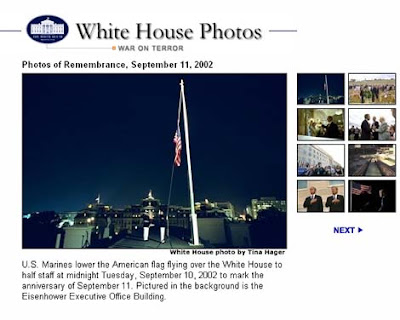

This is the hidden page from the White House site.

There are many more interesting sites which you can unlock using this tutorial. Happy unlocking!